Updated: June 9, 2023.

Looking to acquire some practical SEO knowledge? Here is a list of top 100 SEO mistakes I identified in the last 100 SEO audits I conducted.

I am a technical SEO specializing in performing advanced and in-depth SEO audits that often take me 20-40 hours to complete.

I use 25+ various tools to audit a single website so that I can have the entire picture of its SEO well-being.

Over the last 2 years, I’ve performed around 100 audits and identified various SEO mistakes.

In this list, I am sharing with you the 99+ ugly SEO mistakes I identified in the last 100 SEO audits I have performed.

Whether you are an SEO or a website owner, I believe you can really learn a lot from this list. Let’s dive in!

99+ ugly SEO mistakes to avoid

The SEO mistakes I list here are not in any special order.

To compile this list, I simply went through the audits I had performed and hand-picked the most interesting SEO mistakes.

Under each mistake, you will find the information about its type (technical, on-page, etc.) and severity (low, medium, high, and critical).

These sites definitely did not follow the SEO best practices outlined by Google.

❗I am not able to disclose specific domains, and in most cases these issues have already been resolved. Where possible, I share a screenshot either from my audit or from a different site with the same error.

Discussion about SEO mistakes

Here is the video where Dan Shure and I talk about some of the SEO mistakes from this list. Enjoy!

Low-value links

Type: on-page SEO

Severity: high

This website used “Read more” links on all pages, including the blog page, homepage, and category pages. Practically all internal links had the same anchor text of “Read more”.

This is one of the most common mistakes I encounter on the websites I audit.

I believe that websites should always use descriptive anchor text for internal links to help Google understand the topic of the linked page.

So, instead of using “Read more” in an internal link to my guide on SEO audits, I use “how to do an SEO audit”.

The developer of the site removed “Read more” and made the article titles the links instead. After that, the site’s visibility went through the roof.
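If you want to find generic anchors like this on your own pages, a minimal Python sketch using only the standard library's html.parser could look like this. The phrases in GENERIC_ANCHORS are my own assumption; adjust the set to whatever your theme actually outputs.

```python
from html.parser import HTMLParser

# Assumed list of anchors that say nothing about the linked page.
GENERIC_ANCHORS = {"read more", "continue reading", "click here", "learn more"}


class AnchorTextAudit(HTMLParser):
    """Collects (href, anchor text) pairs for every <a href> on a page."""

    def __init__(self):
        super().__init__()
        self.links = []      # list of (href, text) tuples
        self._href = None    # href of the <a> currently open, if any
        self._text = []      # text fragments seen inside the current <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace so "Read\n more" compares as "Read more".
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def generic_anchor_links(html):
    """Return links whose anchor text is one of the generic phrases."""
    parser = AnchorTextAudit()
    parser.feed(html)
    return [(href, text) for href, text in parser.links
            if text.lower().rstrip(" .…»›") in GENERIC_ANCHORS]
```

Feed it the HTML of each crawled page; every hit is a candidate for a descriptive anchor such as the article title.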

“Load more” instead of pagination

Type: technical SEO

Severity: critical

“Load more” requires a user to click to view more content. Googlebot does not click on buttons and may not see the content that is displayed after clicking on “Load more”.

The site was using “Load more” on the blog page and all category pages which by default displayed only four blog posts.

To make things even worse, all blog categories had a no-index tag. As a result, Google did not even index the majority of articles.

No internal linking structure on top of “Load more” & “Continue reading”

Type: on-page SEO

Severity: critical

Internal linking is undoubtedly one of the most important aspects of SEO. This site had practically no internal linking structure:

- The site had one web page (homepage) whose specific parts were linked from the main menu with the help of jump links. You clicked on a specific menu element and you jumped to a specific section of the homepage.

- The site had a blog but by default, it displayed only six articles. The links to these articles were implemented through “Continue reading”.

- The remaining articles were visible only upon clicking “Load more”.

- There was no internal linking between these articles.

As a result, only the homepage was indexed, and none of the articles were!

Homepage content invisible to Google

Type: technical SEO

Severity: critical

At first glance, this site looked like a standard small-business WordPress site.

However, as I always do, I crawled the site with JavaScript rendering and checked the site’s screenshot in PSI and Rendertron.

To my surprise, the content of the homepage was invisible. To confirm this, I did a site: search with a sentence copied from the homepage in quotation marks. No results were returned.

Googlebot is getting better at crawling and understanding JavaScript but in this particular case, the content of the homepage was not visible to Google.

Cookie notices and chatbot responses marked as headings

Type: on-page SEO

Severity: medium

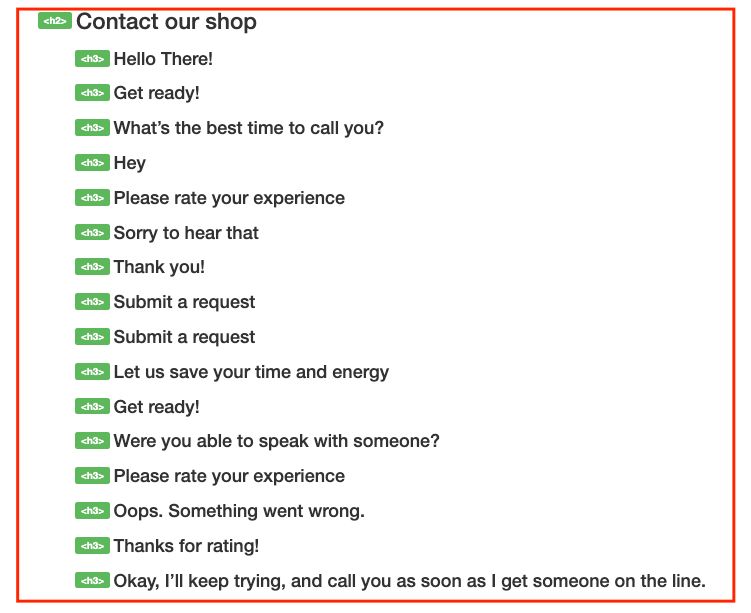

Headings should be used to divide the content of the page into specific sections. However, more often than not, elements such as cookie notices or chat functionalities become headings.

I actually had two websites with these issues:

- An e-commerce website that had no headings other than the entire cookie notice element with different choice options marked as headings of different levels.

- A small-business site with chat functionality where all possible chatbot responses were injected as headings! This looked both funny and scary.

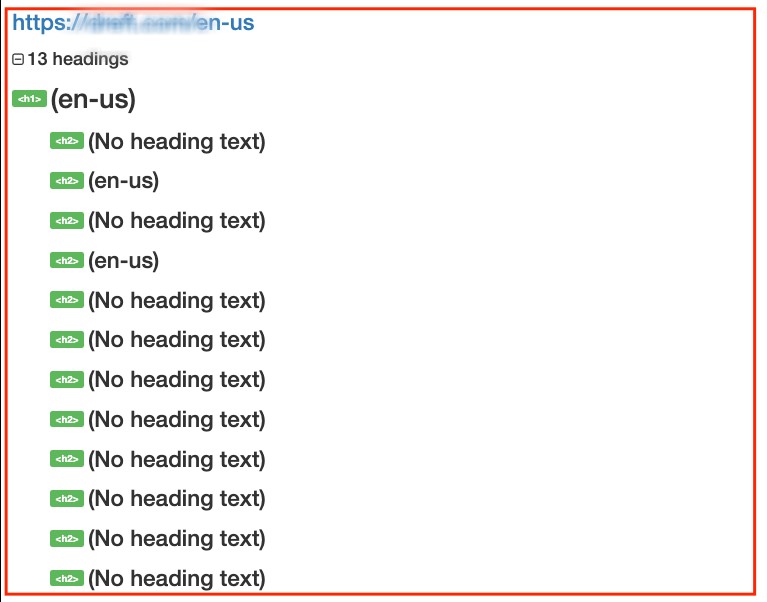

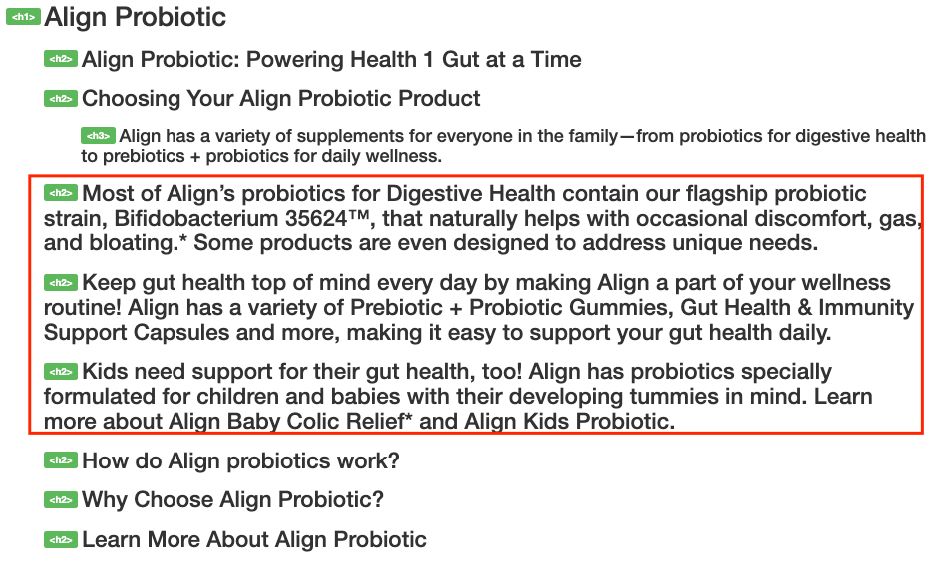

The corrupt and/or overly complicated structure of headings

Type: technical SEO

Severity: medium

Many things can go wrong with headings. In the case of this site, the homepage had more than 200 headings of various levels and lengths.

In some cases, entire sentences or single numbers were marked as headings. This site was a perfect example of the (incorrect) use of headings for styling purposes.
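To see a page's heading structure the way a crawler does, you can dump its outline. This is a rough sketch, not a replacement for a proper crawler: the flagging rules (empty headings, headings that are only numbers, headings longer than 15 words) are arbitrary assumptions meant to surface headings used for styling, and the full outline is returned so you can spot cookie notices or chatbot text yourself.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Records (level, text) for every <h1>-<h6> element in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None   # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None


def suspicious_headings(html, max_words=15):
    """Return the full outline plus headings that look like styling.

    The rules below are illustrative assumptions, not Google guidelines.
    """
    parser = HeadingOutline()
    parser.feed(html)
    flagged = [(level, text) for level, text in parser.headings
               if not text or text.isdigit() or len(text.split()) > max_words]
    return parser.headings, flagged
```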

Random or no ALT text

Type: accessibility/user experience

Severity: medium

This is another very common SEO mistake that usually takes one of these two forms:

- The website has a lot of high-value images but does not use ALT text anywhere. This is a huge SEO opportunity missed if these are original images that potential clients may want to find using Google Image Search. I audited at least a few e-commerce sites whose images did not have the ALT text.

- The website uses stock images and uploads them to the site without modifying the file name, file size, or image EXIF data. The stock image descriptions are often used as the ALT text of these images. Not only is this a bad user experience, but these images stand absolutely no chance of ranking in Google Image Search.
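A quick way to list images with missing ALT text is another small html.parser sketch. Keep in mind that an empty alt="" is the correct choice for purely decorative images, so treat the output as a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class ImageAltAudit(HTMLParser):
    """Collects the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser also routes self-closing <img ... /> tags here.
        if tag == "img":
            attributes = dict(attrs)
            alt = attributes.get("alt")
            if alt is None or not alt.strip():
                self.missing_alt.append(attributes.get("src"))
```

Usage: create an `ImageAltAudit()`, call `feed()` with the page HTML, then read `missing_alt`.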

Canonical links pointing to non-indexable pages

Type: technical SEO

Severity: critical

I definitely see this mistake more often than I should.

In the case of this small e-commerce site, the main product pages had canonical links pointing to non-indexable default empty product pages (the site had custom product pages with different URLs). Not surprisingly, neither default nor custom product pages were indexed by Google.

A canonical link is treated as a hint by Google but in such a case it may lead to the page not getting indexed by Google or being removed from the index (if this error happens after a migration, for example).

Duplicate homepages with incorrect redirects

Type: technical SEO

Severity: high

This was a really ugly SEO mistake.

The site (a bank’s website) had two versions of the homepage, such as domain.com and domain.com/index.html. No canonical link elements were used.

In the website’s internal linking, all links pointed to domain.com/index.html, and the /index.html version was 302-redirected to domain.com.

A 302 redirect (depending on how long it is in place) is treated as temporary and may result in the redirected URL being indexed instead of the target URL.

This was exactly what happened to this website. The domain.com (canonical) version of the homepage was not indexed, while domain.com/index.html was.

Menu links pointing to staging URLs

Type: technical SEO

Severity: high

This was a freshly-redesigned e-commerce website and this SEO mistake was really UGLY.

All links from the main menu and the homepage were still pointing to the staging URLs which had a no-index tag.

The result was a huge traffic loss after the migration and deindexation of some important product and product category pages.

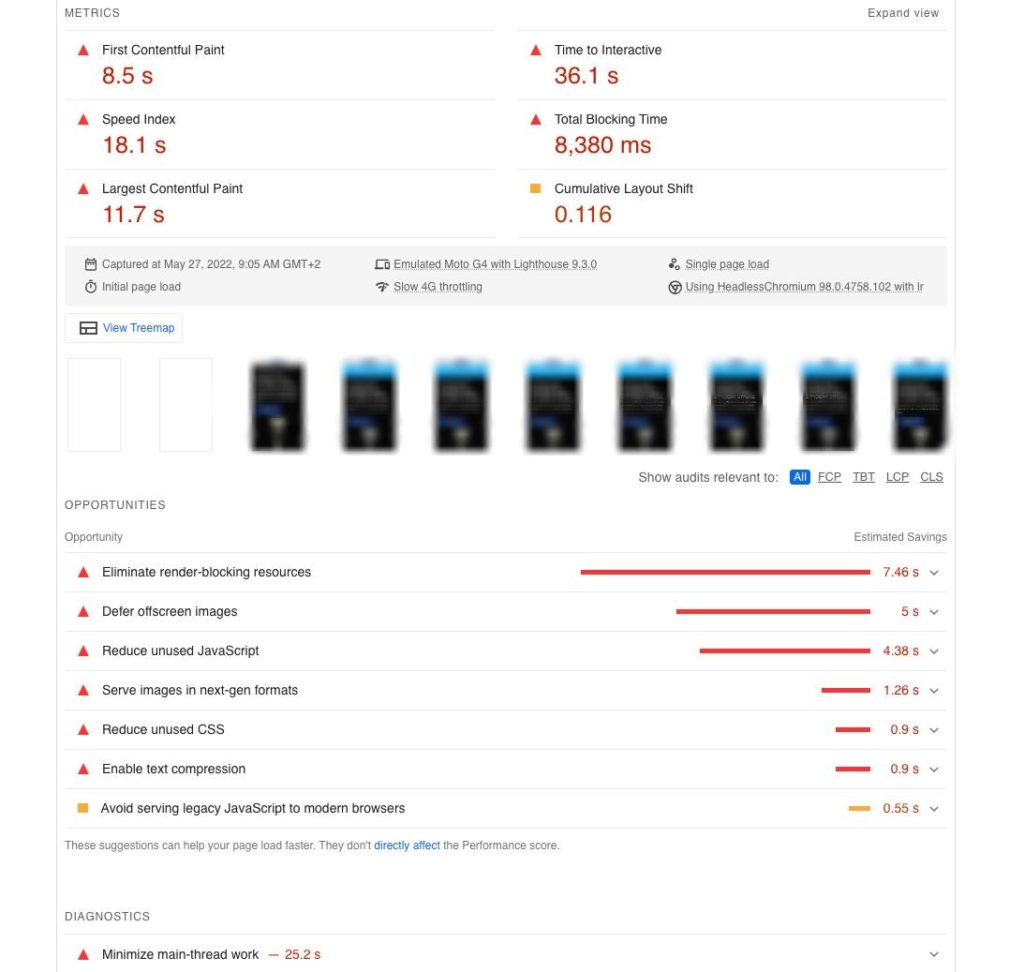

Horrendous speed scores

Type: technical SEO

Severity: high

In this case, I was performing speed & performance audits of various brands of Procter & Gamble.

The speed & performance scores in some cases were very bad and some sites needed 2 or more minutes to fully load or become fully interactive.

Speed may be a tiny ranking factor or a tie-breaker but if a site’s speed is in minutes, then I bet it will have a negative impact on user experience and – consequently – SEO.

No text on the homepage

Type: on-page SEO

Severity: medium

This is another mistake I wish I saw less often.

In the case of this website (e-commerce), all content on the homepage was in the form of sliders and graphic banners. There was not a single sentence or heading.

For Google, that was a basically empty page that did not deserve to rank for anything and was unable to transfer its authority to other web pages of the site.

No Schema

Type: enhancements

Severity: medium

Again and again, I see sites, including WordPress sites, that use no structured data, even for basic stuff like Organization, Breadcrumbs, or FAQ.

Structured data may be a bit more advanced stuff but it has never been easier to add it to your website.

You don’t need to know how to code to do that. All you need to do is set up a plugin (such as Rank Math) and add at least basic Schema types to your website.
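If you are curious what such a plugin outputs, here is a minimal sketch that builds a basic Organization object as JSON-LD. The property set is deliberately small and the business name and URLs are placeholders; check Google's structured data documentation for the properties your case actually needs.

```python
import json


def organization_jsonld(name, url, logo, same_as=()):
    """Return a <script> tag with minimal schema.org Organization JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "logo": logo,
    }
    if same_as:
        data["sameAs"] = list(same_as)  # e.g. official social profiles
    # Escape "</" so no value can close the <script> element early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(organization_jsonld("Example Store", "https://example.com/",
                          "https://example.com/logo.png"))
```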

Identical title tags and H1s on product variations

Type: on-page SEO

Severity: high

I detected this mistake on an e-commerce website.

The website sold many types of products, and each product came in different variations. Each variation had a separate URL, but the title tags and H1 tags of these URLs were all identical.

This was a very ugly SEO mistake that basically prevented product variations from ranking for a lot of long-tail phrases with modifiers like color, size, style, etc.

Tags duplicating categories

Type: technical SEO

Severity: high

Fortunately, I see fewer and fewer of these mistakes but they still happen.

This was a blog that used both tags and categories and did not use them in the correct way:

- Tags were the same as categories.

- Both tags and categories were indexable.

- Tag and category pages listed the same posts.

This caused duplication, and as a result, neither the tag pages nor the category pages ranked for anything.

No optimization of images & graphics

Type: technical SEO

Severity: medium

This was a WordPress website with a custom design with a lot of curves, different shapes, and photos of people.

All the graphics and photos used were in original sizes and were PNG files. Some files weighed as much as 5 MB.

Needless to say, this slowed down the site immensely and made it hard to use on a mobile device with a poorer connection.

FAQs with no FAQ Schema

Type: technical SEO

Severity: medium

I see more and more sites having FAQ sections, which is great. Unfortunately, more often than not, FAQ sections are not marked with FAQ Schema.

This was another small e-commerce site that had really great content and a lot of E-A-T. It had an FAQ section under each product but the questions were not marked with FAQ Schema.
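FAQ Schema is JSON-LD of type FAQPage, where each question is a Question with an acceptedAnswer. A minimal sketch that turns (question, answer) pairs into that markup, assuming the answers are plain text that is also visible on the page:

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)
```

The question and answer text in any usage would come from the existing FAQ section under each product, so the markup matches what users see.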

Lorem Ipsum content on the live site

Type: User experience

Severity: high

This is quite common with website redesigns and migrations. I recently audited a freshly redesigned website.

One of the first things I noticed (during a manual review of the website I always do at the start of the audit) was that on the homepage and on the blog page there was still a lot of “Lorem ipsum” content.

Fortunately, it had been fixed before anyone but me noticed.

Check my website redesign SEO checklist.

A no-index tag on the homepage

Type: technical SEO

Severity: high

This was a very quick audit. My task was to investigate why the site did not rank for its branded queries.

I was ready for some deep investigation but it turned out that the site simply had a no-index tag on the homepage.

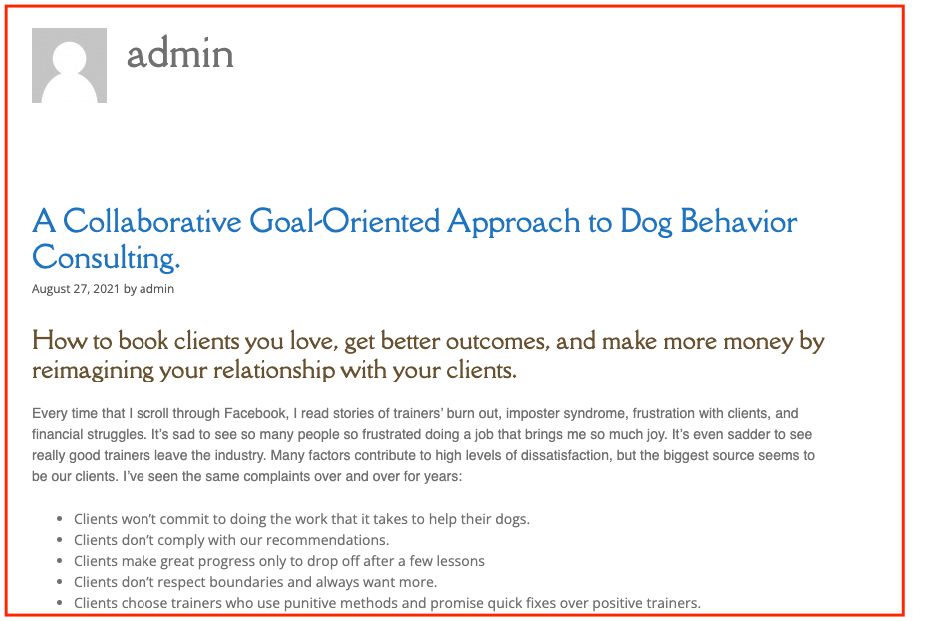

Author box with “Admin”

Type: E-A-T

Severity: high

Here I was doing an E-A-T audit of a YMYL website.

This really struck me because the authors of the site were indeed experts on the matter but the author bio on blog posts showed “Admin”.

When it comes to the T – trust – in E-A-T, this was a very negative signal sent both to Google and users.

Links that do not look like links

Type: user experience

Severity: high

This was a custom-designed lawyer website with internal links styled like normal text. It was impossible to tell what was a link and what was not.

As a result, users rarely clicked on internal links pointing to important pages or clicked them accidentally.

The intent behind this was not to manipulate Google but this was a bad user experience.

When it comes to Google, I believe the links implemented this way not only misled users but also carried less “SEO” juice than normal (underlined) links.

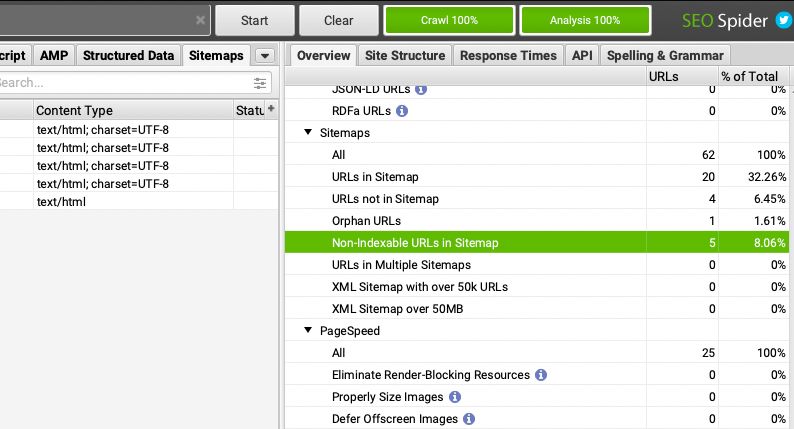

Non-indexable URLs in the XML sitemap

Type: technical SEO

Severity: medium

Having non-indexable URLs in the XML sitemap submitted to Google is simply a waste of Google’s time (i.e. crawl budget).

Of course, this is not a problem for small websites (a few hundred or a few thousand pages) that submit a handful of non-indexable URLs in their XML sitemaps.

However, with huge e-commerce sites, this can indeed become an issue.

The site I was auditing was a huge e-commerce site (millions of pages) with hundreds of thousands of non-indexable pages in its XML sitemaps.

The main problem this site was dealing with was that 25% of its indexable pages were in the “Discovered - currently not indexed” bucket in GSC, and Google wasn’t even crawling them.

Was Google spending its resources where it shouldn’t have?

No text links

Type: technical SEO

Severity: high

It is nice to have beautiful-looking boxes for highlighting website sections or articles.

However, this particular site did not use text links at all and all links were implemented in the form of such boxes where the entire element was a link wrapped in <div>.

My recommendation was to remove the link from the entire element and instead make the title of the article a text link.

No redirects from WWW/NON-WWW and HTTP

Type: technical SEO

Severity: high

In most cases, Google can deal with sites that lack redirects from www to non-www (or vice versa) or from HTTP to HTTPS, and it picks the correct canonical version on its own.

However, in the case of the site I was auditing the results were devastating. The site had “www” before the migration. After migration, the “www” was removed and no redirects were implemented.

The site lost 90% of its traffic and rankings and never fully recovered, because I was only brought in to audit it 6 months after this unsuccessful migration.
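The redirect rule itself is simple. Here is a sketch of how the 301 target for any incoming URL could be computed, assuming the canonical version is HTTPS and non-www (pass keep_www=True if you canonicalize to www). In practice this belongs in the server or CDN configuration; the function only illustrates the rule, and dropping any port number is a simplifying assumption.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_redirect_target(url, keep_www=False):
    """Return the URL a 301 should point to, or None if url is already canonical."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # lowercased, port dropped
    if keep_www and not host.startswith("www."):
        host = "www." + host
    elif not keep_www and host.startswith("www."):
        host = host[len("www."):]
    target = urlunsplit(("https", host, parts.path or "/",
                         parts.query, parts.fragment))
    return None if target == url else target
```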

The same H1 on all pages

Type: on-page SEO

Severity: high

This was another e-commerce site and its mistake was simple. All 2000 pages had the exact same H1 with the name of the store.

The site was struggling to rank for anything and I strongly believe that this was one of the things that contributed to that.

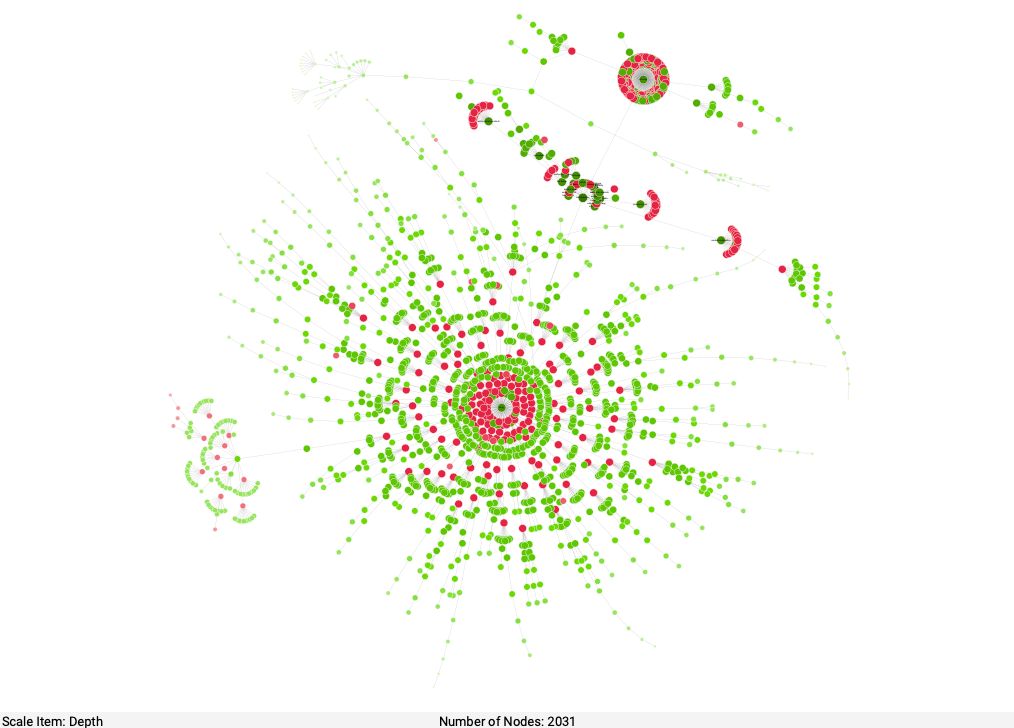

The highly complex structure of the website

Type: technical SEO

Severity: high

Whenever I audit a site, I always check its structure visualization in Screaming Frog and/or Sitebulb.

I love seeing simple and clear structures. Sometimes, however, I see horrible things.

This is what I saw on one of the recently-audited websites.

The issues the site had were incorrect internal linking, no pagination, a no-index tag on category pages, and a lot of broken links.

No blog section

Type: general

Severity: high

In 99% of cases, the best way to grow the site’s organic traffic is to have the blog section with frequently published articles targeting specific keywords/topics.

This example comes from a small e-commerce website selling a bunch of products. This site was very small (10+ pages) and had trouble growing organic traffic.

What I suggested doing was creating a blog section with various how-to and informational articles relating to the products.

70+ outdated plugins running on the website

Type: WordPress

Severity: high

This ugly SEO mistake can quickly become super ugly if it gets out of control.

This is unfortunately what happened with this site.

My task was to find the cause of very poor performance and speed. If I had only been looking at Google PageSpeed Insights, I wouldn’t have got the entire picture.

Fortunately, I asked the owner (as I usually do) to give me access to the WordPress dashboard. What I saw was really ugly.

The site was literally running 70+ WordPress plugins. Most of them were outdated and not used by the site (but were active). I really had a lot of cleaning to do.

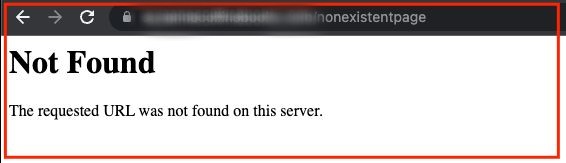

No custom 404 page

Type: user experience/technical SEO

Severity: high

Whether you like it or not, your users will sooner or later land on a non-existent page on your website.

If you present them with a nice-looking custom 404 that invites them to explore other parts of your website, you stand a chance of not losing them entirely.

If, on the other hand, your users see an ugly default error page that looks totally different from the rest of your site, you cannot expect them to do anything other than click the back button.

I saw this error on multiple occasions on e-commerce websites running custom CMSes that do not have a custom 404 page by default.

Mixed content after server change

Type: technical SEO/user experience

Severity: high

I spotted this ugly SEO mistake on a WordPress website that had just been moved to a new server.

The site had an SSL certificate but some of the links and resources were still being loaded over HTTP.

With WordPress sites especially, there is a very quick fix for that, so the site was fixed in no time.
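If you want to check a page for leftover HTTP resources yourself, here is a minimal sketch. It only inspects a handful of common resource attributes (an assumption; it ignores srcset and URLs inside CSS) and lists anything requested over plain http://.

```python
from html.parser import HTMLParser

# (tag, attribute) pairs that make the browser fetch a sub-resource.
RESOURCE_ATTRS = {("img", "src"), ("script", "src"), ("link", "href"),
                  ("iframe", "src"), ("source", "src"),
                  ("video", "src"), ("audio", "src")}


class MixedContentAudit(HTMLParser):
    """Lists sub-resources an HTTPS page still requests over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if ((tag, name) in RESOURCE_ATTRS and value
                    and value.lower().startswith("http://")):
                self.insecure.append((tag, value))
```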

Empty links “#”

Type: technical SEO/user experience

Severity: high

This is another very frequent mistake I see on freshly developed websites. The person adding the content and links simply forgot to update all of them and left a few “#” links here and there.

This is not a tragic thing in terms of SEO but can be confusing to users and possibly to search engines if we forget to add a very important internal link.

Targeting the same keywords on all pages

Type: SEO

Severity: high

This is a very serious strategic SEO mistake.

This was an e-commerce website that specialized in selling one type of product and targeted one phrase (a very general one) on all its web pages.

The site was selling sports shoes. It had 1000 different types of shoes. Every product page was targeting the exact same phrase “shoes” instead of focusing on a specific product like “Nike running shoes”.

Unsurprisingly, none of the web pages ranked for anything.

I did keyword research and mapped the correct and unique keywords to each URL.

500K excluded pages in GSC

Type: technical SEO

Severity: high

Depending on what you see in the Excluded report in GSC, it may or may not be a problem.

In the case of this website, it was a huge problem. The site had been hacked, and no one had noticed for many months.

The number of excluded spammy pages in GSC was growing rapidly.

There was a lot of cleaning up to do, but we did it, and the number of excluded pages finally started to fall.

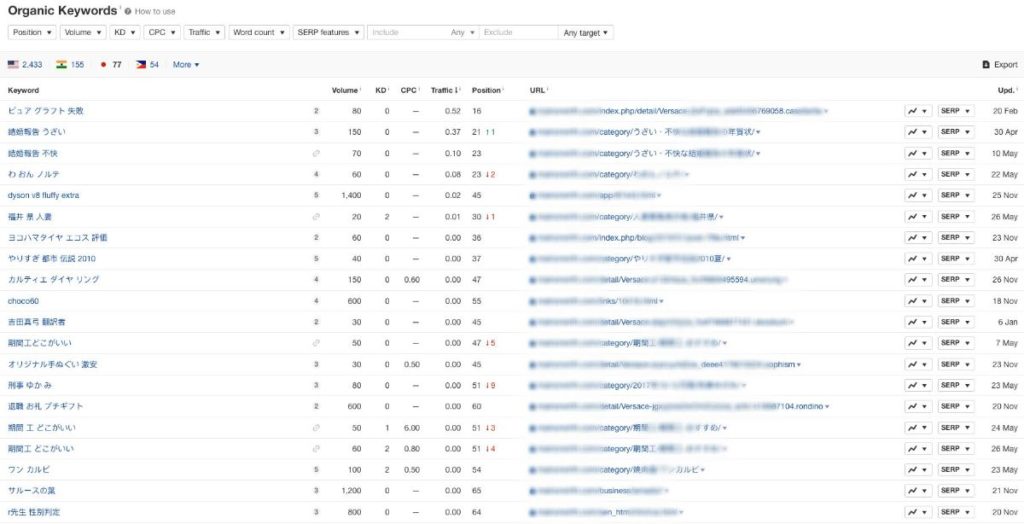

Chinese characters in search results

Type: security

Severity: high

This error is similar to the one above. If you don’t have access to the GSC data to check what is in the Coverage report, you definitely need to check the site with the site: command.

In the case of this site, I discovered that it had 60K spammy Chinese pages in the index.

The hack was so serious that even Ahrefs was showing thousands of Chinese keywords as organic keywords of this site.

This completely distorted Ahrefs organic visibility and organic keywords reports.

To speed up the deindexation of these pages, I served 410 status codes.
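On a typical setup you would configure this in the server or CMS. As a hedged illustration, here is a WSGI middleware sketch that answers 410 Gone for URLs matching a spam pattern and passes every other request through. The SPAM_PATH regex is hypothetical; the real pattern depends entirely on what the hack injected.

```python
import re

# Hypothetical pattern for the injected spam URLs; replace it with the real one.
SPAM_PATH = re.compile(r"^/[a-z0-9]{8,}\.html$")


def gone_for_spam(app, pattern=SPAM_PATH):
    """Wrap a WSGI app so hacked URLs return 410 Gone instead of 404 or 200."""
    def wrapper(environ, start_response):
        if pattern.match(environ.get("PATH_INFO", "")):
            start_response("410 Gone", [("Content-Type", "text/plain")])
            return [b"Gone"]
        return app(environ, start_response)
    return wrapper
```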

No hreflang on an English/Spanish site

Type: technical SEO

Severity: high

This was a relatively small site of a US-based business. The site had a total of 50 pages in English and about 10 pages in Spanish.

Unfortunately, no hreflang tags were used and as a result, English-speaking users were often served Spanish results and vice versa.
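hreflang annotations tell Google which URL is meant for which language or region. A minimal sketch for a two-language site like this one, assuming en-us and es-us codes and placeholder URLs; every language version of a page should output the same full set, including a link to itself.

```python
from html import escape


def hreflang_tags(alternates, x_default=None):
    """Render <link rel="alternate" hreflang="..."> tags for one page.

    alternates maps a language(-region) code to the URL of that version.
    """
    tags = [
        f'<link rel="alternate" hreflang="{code}" href="{escape(url)}" />'
        for code, url in sorted(alternates.items())
    ]
    if x_default:
        # Optional fallback for users whose language matches no version.
        tags.append('<link rel="alternate" hreflang="x-default" '
                    f'href="{escape(x_default)}" />')
    return "\n".join(tags)
```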

Host issues in the Crawl Stats report in GSC

Type: technical SEO

Severity: high

Host issues in the Crawl Stats report in GSC mean that the site was not available to Google. If this situation persists, Google may start deindexing the site.

The knowledge of those issues can be extremely useful and can save you a lot of trouble.

That’s why I always insist on getting access to the GSC data so that I can really know what is going on with the website from the inside (and look at it through Google’s eyes).

This was a lawyer website that was using poor hosting that had a lot of issues. We changed the server and all issues were quickly resolved.

Breadcrumbs with no Schema.org

Type: enhancements

Severity: high

This is a very common mistake I see. The site has breadcrumbs but does not use Schema to give these breadcrumbs more meaning.

Breadcrumbs help users understand where in the structure of the website they are. For crawlers, breadcrumbs are simply internal links to other pages on the website.

However, if we use BreadcrumbList Schema for breadcrumbs, we add more context for search engines, and the page may be displayed more prominently in search results (rich results).
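BreadcrumbList markup is a list of ListItem entries, each with a position, a name, and the item URL. A minimal sketch, with placeholder names and URLs in the usage:

```python
import json


def breadcrumb_jsonld(trail):
    """Build schema.org BreadcrumbList JSON-LD from (name, url) pairs, root first."""
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }
    return json.dumps(data, indent=2)


print(breadcrumb_jsonld([("Home", "https://example.com/"),
                         ("Shoes", "https://example.com/shoes/")]))
```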

Two competing author pages

Type: E-A-T/user experience

Severity: high

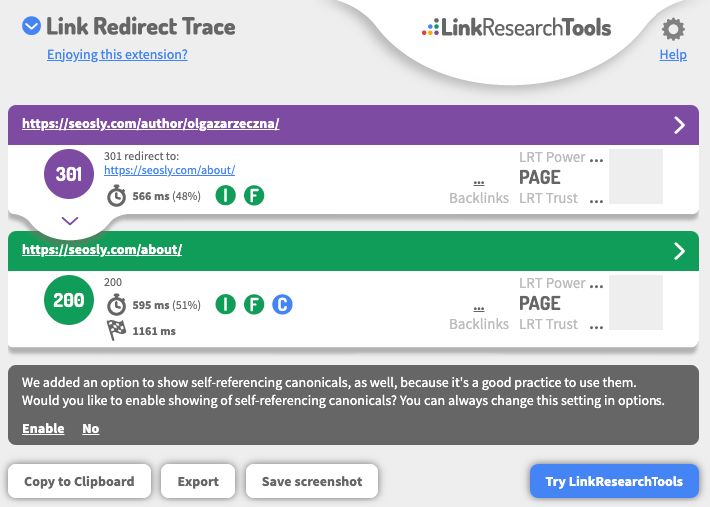

This is a very common mistake I see on WordPress sites. The site I analyzed was a prominent example of that.

The site had an about page about the author and a standard WordPress author page like domain.com/author/name.

Some internal links pointed to the about page, others to /author. External links also pointed to both, which split the signals between two pages instead of concentrating them on one.

What we did was very simple. We redirected the WordPress author page to the about page. Within weeks, the about page started to gain more visibility.

In the case of my site, I have two author pages:

- https://seosly.com/about/ which is the main About page

- https://seosly.com/author/olgazarzeczna/ which is 301-redirected to the above About page.

Suspicious external links to low-quality sites

Type: E-A-T/quality

Severity: high

This was a really UGLY SEO mistake. This was the site of a brick-and-mortar business in California.

When crawling the site, I noticed it had a lot of external links, sometimes as many as 20 external links from one page.

When I analyzed the links in detail, it turned out that they pointed to low-quality PBN-type sites.

The owner of the site was not even aware of that.

It seems that these were the leftovers from the previous – and not necessarily ethical – SEO agency.

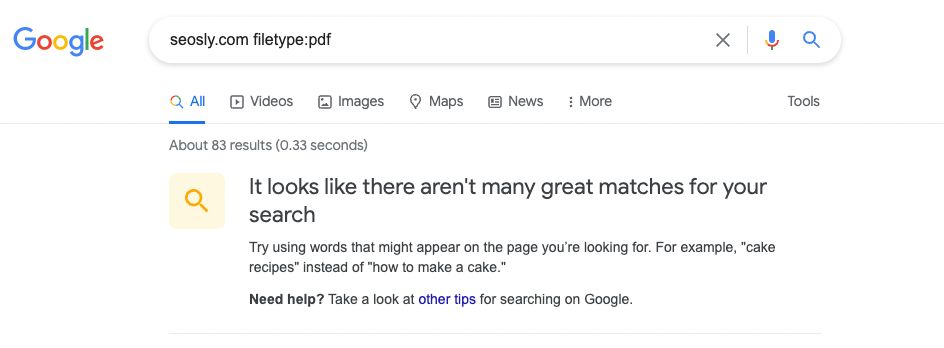

Pages or resources that should not be indexed by Google are indexed

Type: security/quality

Severity: high

Sometimes we do everything to make Google index our pages and Google just won’t. Other times we forget to add a no-index tag and Google indexes the content we want to hide immediately.

This was a sports website where you could buy ready-made gym exercise plans.

When auditing the site, I typed site:domain.com filetype:pdf and noticed that all paid plans were indexed and available in Google.

This was the case for many years and no one had an idea that just with the knowledge of the site: command anyone could get those plans for free.

All internal links redirected

Type: technical SEO

Severity: high

“Link juice” may not be fully transferred through a redirect so unless it’s absolutely necessary we should avoid redirects, especially in internal links.

This is a recent case of a WordPress website.

WordPress by default adds / at the end of URLs. If you type the address without /, it will be automatically redirected to the one with / at the end.

This website had all internal links implemented without /. As a result, each internal link went through at least one hop.
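The fix is to update the links themselves rather than rely on the redirect. A sketch of the normalization, assuming the site uses trailing-slash permalinks and that a dot in the last path segment means the URL is a file (both assumptions to verify on your own site):

```python
from urllib.parse import urlsplit, urlunsplit


def with_trailing_slash(href, site_host):
    """Rewrite an internal link so it matches a trailing-slash permalink."""
    parts = urlsplit(href)
    if parts.scheme not in ("", "http", "https"):
        return href  # mailto:, tel:, etc.
    if parts.netloc and parts.netloc != site_host:
        return href  # external link: not ours to change
    if not parts.path or parts.path.endswith("/"):
        return href  # fragment/query-only link, or already correct
    if "." in parts.path.rsplit("/", 1)[-1]:
        return href  # looks like a file, e.g. /wp-content/uploads/photo.jpg
    return urlunsplit(parts._replace(path=parts.path + "/"))
```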

Homepage with the title tag of “Home”

Type: on-page SEO

Severity: high

Google is getting better and better at understanding websites even if they are not optimized for SEO.

But even so, it is always a good idea to optimize what is under our control. The title tag is fully under our control, even if Google sometimes rewrites the title link it shows in search results.

So let’s not call our homepage “Home” unless we want to/aspire to rank for “Home”.

P.S. We should not want to rank for “home” as this phrase is way too general and ranking for it is virtually impossible.

No headings at all

Type: on-page SEO

Severity: high

This was another e-commerce website.

The main issue it had was that except for one heading on the homepage, it did not use headings at all.

The site had product pages, category pages, and blog posts. No headings whatsoever.

It was a WordPress site created with Elementor, so fixing that was quick and easy.

Images implemented as background

Type: technical SEO

Severity: high

Images implemented with the help of CSS as background are treated as part of the layout and are not indexed by Google. Those images won’t show in Google Image Search.

If you use a lot of images (and especially original unique images of products), then you probably want them to be indexed and visible in Google Image Search.

This example comes from an e-commerce site with a custom WordPress design. The designer clearly did not know a lot about SEO because all product images (both on product pages and category pages) were implemented as background images.

The result? Absolutely no visibility from Google Image Search.

Blog in the subdomain

Type: technical SEO

Severity: high

I am not saying that having the blog in a subdomain is a critical SEO mistake but I am a strong believer in keeping the blog in the main domain.

Google treats subdomains as separate websites so any link your site gets supports either the main domain or the blog subdomain, not both.

If you keep everything in “one place”, you can accumulate more signals and use the blog to help your products rank highly.

The site I was auditing was a store with a blog in a subdomain. The blog was ranking nicely but the store practically had no visibility.

No publication or modification dates on articles

Type: E-A-T/quality

Severity: high

Google has no problem figuring out when you actually published or modified an article. Users, unless they are very tech-savvy, won’t know it.

It is a bad experience if a user cannot determine whether an article is up-to-date and is still relevant.

This was a huge tech website that had thousands of articles. The articles had no dates and no modification dates.

Technology in particular changes rapidly, so missing dates could make users distrust the website.

The issue the site was facing was that it simply could not get out of the stagnation phase.

After a number of E-A-T optimizations and one core update, the site started to grow nicely.

WordPress admin area with no protection

Type: security

Severity: high

WordPress sites are especially vulnerable to hackers. If you don’t protect your admin area in any way, sooner or later your site will be hacked.

This was a very bad example. The site used standard /wp-admin/ URL to log in and standard “admin” user.

Adding to that, there was no limit on login attempts and no Captcha. The site was literally welcoming hackers and bots.

Fortunately, I noticed it before anything bad happened.

Sitemap with HTTP links only

Type: technical SEO

Severity: medium

This was a huge website with hundreds of thousands of web pages. It had 10 sitemaps compressed in the Gzip format.

The problem was that all URLs in the sitemap were HTTP while the site was using HTTPS. This caused Google to go through one hop for each URL indicated in the sitemap.

I believe that on huge websites this may have a negative impact on the crawl budget, and this site did have issues with Google not crawling it frequently enough.
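With gzipped sitemaps, HTTP URLs are easy to miss because you cannot simply open the files in a browser. A minimal sketch that decompresses one sitemap file and lists the http:// locations; it assumes the standard sitemaps.org namespace and works for both a urlset and a sitemap index, since both use loc elements.

```python
import gzip
import xml.etree.ElementTree as ET

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def http_urls_in_sitemap(gz_bytes):
    """Return every <loc> in a gzipped XML sitemap that still uses http://."""
    root = ET.fromstring(gzip.decompress(gz_bytes))
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc")
            if loc.text and loc.text.strip().startswith("http://")]
```

Usage: `http_urls_in_sitemap(open("sitemap1.xml.gz", "rb").read())`, where the file name is just a placeholder.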

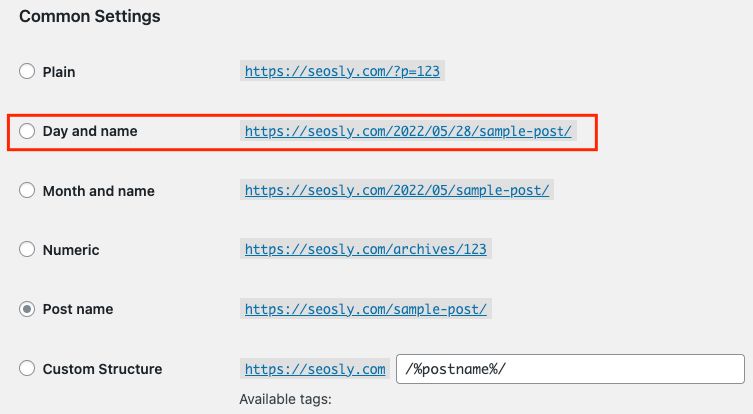

URL addresses (permalinks) with dates

Type: SEO/E-A-T

Severity: high

Having dates in permalinks is quite problematic for two reasons:

- Having old dates in URLs indicates the article may be outdated and may discourage users.

- Changing the URL of a well-ranked page may lead to a decrease in rankings and traffic.

The site that had this issue was the blog of a personal trainer. Some of his blog posts were published more than 10 years ago. He never changed the default WordPress permalink settings with dates in them.

Since his pages needed a lot of on-page SEO optimizations, we decided to change the URLs and implement 301 redirects.

The results were very nice. The site gained about 30% visibility thanks to our optimizations and the change of URLs did not seem to have any negative impact.
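If you face a similar change, the redirect map can be generated rather than written by hand. A sketch, assuming the WordPress “Day and name” structure (/year/month/day/post-name/) and that the new URLs are simply /post-name/. It refuses to build a map in which two old posts would end up on the same new URL.

```python
import re

# WordPress "Day and name" permalinks, e.g. /2012/05/17/squat-form/
DATED_PERMALINK = re.compile(r"^/\d{4}/\d{2}/\d{2}/(?P<slug>[^/]+)/?$")


def redirect_map(old_paths):
    """Map dated permalinks to /slug/ targets for 301 redirects."""
    redirects = {}
    seen_targets = set()
    for path in old_paths:
        match = DATED_PERMALINK.match(path)
        if not match:
            continue  # not a dated post URL: leave it alone
        target = f"/{match.group('slug')}/"
        if target in seen_targets:
            raise ValueError(f"two posts would share {target}")
        seen_targets.add(target)
        redirects[path] = target
    return redirects
```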

The website is available and indexable in two instances (staging and production)

Type: technical SEO

Severity: high

I wish I saw this less often. This was a website of a window manufacturer. They had just got a new site and I was supposed to audit it and recommend SEO optimizations.

The first thing I discovered was that both the new (production) URL and the staging URL were indexed in Google.

The brand searches returned a mix of production and staging URLs. Awful.

The site blocking any traffic coming from the US

Type: technical SEO

Severity: critical

The company reached out to me to help them investigate why Google was not indexing their UK-based store.

At first glance, everything looked OK. The site had no no-index tag and did not block crawling in robots.txt.

However, a deeper investigation showed that the site was redirecting anyone coming from the US to their different store in the US (on a separate domain).

Since Googlebot crawls mostly from US IP addresses, it was also redirected and never got a chance to crawl and index the UK-based store.

Competitor’s data in Schema

Type: technical SEO

Severity: medium

This was the site of a dentist in a big city in the US.

During my audit, I noticed that the Organization Schema contained information about a different business than the one the site was about.

I googled that business and found out it was a direct competitor.

It turned out that the previous SEO was copying the Schema of competitors but in a few instances forgot to change it. LOL.

All bots disallowed in robots.txt

Type: technical SEO

Severity: critical

I thought I would never see this mistake on an actual site. I knew it from numerous tutorials on robots.txt and Google documentation.

This was a relatively new site on a custom CMS. The problem they were facing was that Google was not crawling and indexing the site.

I didn’t even have to look into GSC. I only manually checked robots.txt and immediately knew the answer.
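You can run the same check programmatically with Python's standard urllib.robotparser. This module follows the original robots.txt rules and does not implement every Google extension (wildcard matching, for example), so treat it as a quick sanity check rather than a replica of Googlebot.

```python
from urllib.robotparser import RobotFileParser


def googlebot_allowed(robots_txt, url):
    """Check whether Googlebot may crawl url under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("Googlebot", url)
```

A robots.txt containing `User-agent: *` followed by `Disallow: /` makes this return False for every URL on the site.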

The site was barely functional on mobile

Type: user experience

Severity: critical

This was a custom-designed WordPress site of an online school. The site looked relatively OK on the desktop.

However, when I opened the site on my phone, it did not look OK at all. The layout was basically broken, headings were on top of each other, and photos were way too big.

It turned out that the designer forgot to design the site for mobile and the person coding the site also did not think about how the site would look on mobile.

Google Tag Manager code in <body>

Type: technical

Severity: critical

The site came to me with a complaint that their GA4 implementation did not work.

It turned out that they implemented GA4 through Google Tag Manager and the GTM code was added to the <body> instead of the <head>.

The consequences of such implementation may be way worse than GA4 or other tags not working.

We moved the code to the <head> and GA4 started to work!

Nofollowed internal links

Type: technical SEO

Severity: medium

This was actually a site of an SEO agency that reached out to me for help with their site.

They were strong believers in “PageRank sculpting” and – consequently – nofollowed all internal links in the footer and main menu.

They only left a few do-follow links to key pages with their offer and services on the homepage.

The site was completely stuck in terms of organic growth.

A few weeks after we “unlocked it”, the visibility in GSC started to go up.
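Spotting nofollowed internal links is another quick parser job. A minimal sketch, assuming that relative links and links to the site's own host (site_host) count as internal:

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class NofollowInternalAudit(HTMLParser):
    """Collects internal <a href> links that carry rel="nofollow"."""

    def __init__(self, site_host):
        super().__init__()
        self.site_host = site_host
        self.nofollowed = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        rel = (attributes.get("rel") or "").lower().split()
        if href is None or "nofollow" not in rel:
            return
        # An empty netloc means a relative link, which is internal too.
        if urlsplit(href).netloc in ("", self.site_host):
            self.nofollowed.append(href)
```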

Canonical tag on blog posts pointing to draft URLs

Type: technical SEO

Severity: critical

This was a technical issue on a WordPress site, but its consequences were devastating: the majority of well-ranked blog posts were removed from Google’s index.

Most blog posts had canonical links pointing to draft URLs, which return a 404 to Google.

The site was custom-coded, had gone through an unsuccessful migration, and had been moved to a new server. I wasn’t able to say definitively what caused the issue.

However, in this case, it was enough to have spotted the actual reason for the site’s drop.

We fixed canonical tags and the site slowly started to recover.

Unmoderated comment section with do-follow links

Type: security/SEO/user experience

Severity: high

For quite some time now, WordPress has been adding a no-follow tag to links in comments.

However, on older blogs (which have not been updated for a long time), it is not uncommon to see spammy comments with do-follow links to spammy sites.

This was the personal blog of a traveler who reached out to me for help. He had heard about something called SEO and wanted me to point him in the right direction.

One of the first things I spotted was that the majority of his blog posts had hundreds of spammy comments with do-follow links to all sorts of sites.

There was absolutely no value in these comments, so we deleted them all.

Featured image covering the entire above-the-fold section

Type: SEO

Severity: high

I uncovered this issue on the custom-designed site of a law firm.

The blog posts were designed with a gigantic featured image at the top and the H1 and the copy below it.

On mobile, you had to scroll a bit to see the actual title and the first paragraphs of the article.

I have not run any tests on this, but I am a believer in putting the actual content above the fold.

We redesigned the layout so that the title (H1) came first, followed by the date, category, author name, one sentence of copy, and the featured image.

The “last updated” date always showing today’s date

Type: technical SEO/user experience

Severity: medium

This was an affiliate site that had 60+ review articles that pretended to be updated every day.

The site suffered heavily after the first Google Product Reviews update.

I implemented a number of E-A-T optimizations, one of them being actually updating all articles and making sure the “last updated” date showed the real date of the last update.

The site fully recovered after the third iteration of the Google Product Reviews update.

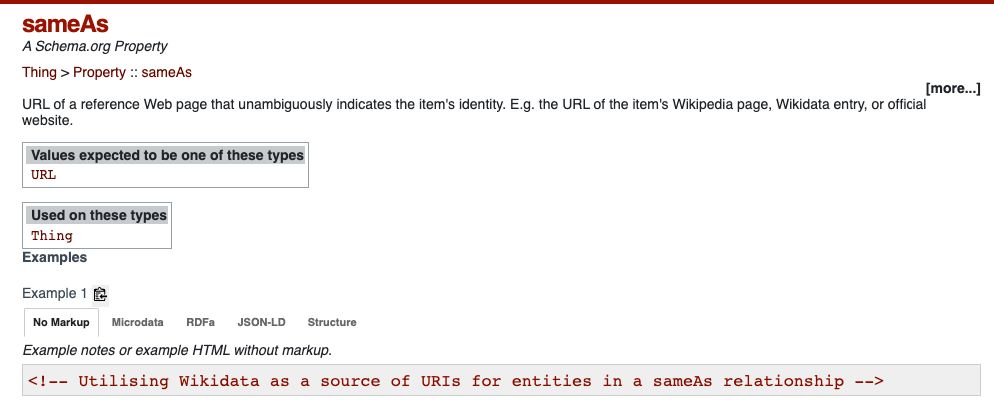

sameAs pointing to Wikipedia

Type: technical SEO

Severity: high

This was a very recent case. I was reviewing a site that had a manual penalty.

One of the things the site was doing was exactly what Dixon Jones describes in his article about Schema stuffing.

The site heavily overused sameAs, pointing it to hundreds of different entries on Wikipedia and wikiHow.

Schema.org defines sameAs as the URL of a reference web page that unambiguously indicates the item’s identity, such as the item’s Wikipedia page, Wikidata entry, or official website.

The site also stuffed keywords using Schema.

Unrelated thin content

Type: content

Severity: high

This was another affiliate site, this one reviewing home appliances. Some of the posts were ranking OK, but practically all of them were roundup reviews with lists of appliances.

The author strongly believed in keeping a certain ratio of informational to affiliate content and uploaded 50 short, low-quality articles to the site. These articles were recipes, which had nothing to do with the topic of the site. This was not a recipe site!

We removed all unrelated and thin content. The site recovered after a core update but unfortunately went down again after the last Google Product Reviews update.

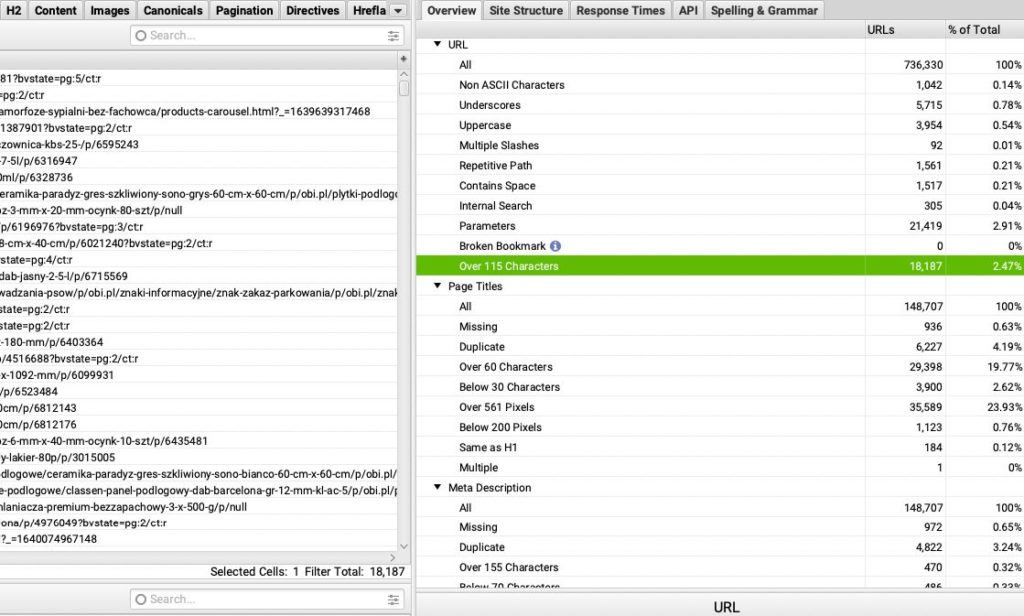

Horribly long URLs

Type: on-page SEO/user experience

Severity: medium

I am not sure what happened here, but this WordPress site had horribly long URLs (entire sentences were used as URLs).

I suppose this happened because the site had very long titles and the person publishing the articles did not really pay attention to the URLs being generated.

Since this blog had no traffic and no visibility, we changed all URLs into shorter and friendlier ones.

No information about the people behind the site

Type: E-A-T

Severity: high

I believe that in most cases you won’t get away with not providing at least basic information about the author of the website.

This was an e-commerce site selling oils. The people behind it did not want to show their faces or names, and there was no way of telling who was actually running the site.

This was a huge mistake in terms of E-A-T.

No address or phone number on an e-commerce site

Type: E-A-T

Severity: high

This was a small e-commerce site selling toys for dogs. A very nice site! However, it did not provide the address or a phone number.

Users were complaining that they could not get in touch with the owner in any way other than by e-mail.

If people buy from you, they should be able to call you or know where your business is located.

Grammatical and spelling mistakes

Type: E-A-T

Severity: high

This was a personal blog about technology run by a person who is not a native speaker of English.

This was a classic case of an owner who thinks his site is super high-quality while other people who see it have a very different opinion.

The biggest problem here was that the text was full of grammatical errors, spelling mistakes, and punctuation errors.

Google clearly states in its guidance on content quality that you should pay attention to grammar and spelling.

Fake authors with AI-generated photos

Type: E-A-T

Severity: high

This was an affiliate site reviewing health-related products, so it was heavily YMYL.

As is often the case with affiliate sites, the names of authors were fake and their photos were generated by AI.

However, the person behind the site was actually an expert in those products but did not want to reveal his identity.

What we did instead was hire two doctors who were comfortable showing their faces and names on the site, as well as journalists specializing in that area who wanted to author the articles.

Canonical links pointing to HTTP

Type: technical SEO

Severity: critical

This was the mistake I noticed after crawling the site. The site was using HTTPS but somehow all canonical links pointed to HTTP versions.

It looked like a leftover from the HTTP version of the site from many years ago.

This was fortunately a quick fix.
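Because a leftover like this tends to affect every template at once, it is worth checking automatically. The sketch below, using only Python’s standard library, extracts the `rel="canonical"` URL from a page’s HTML and flags it when its scheme (HTTP vs HTTPS) differs from the page’s own. The sample URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class CanonicalFinder(HTMLParser):
    """Stores the href of the first <link rel="canonical"> on the page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attrs = dict(attrs)
        if "canonical" in (attrs.get("rel") or "").lower().split():
            self.canonical = attrs.get("href")


def canonical_scheme_mismatch(html, page_url):
    """Return the absolute canonical URL if its scheme differs from page_url's, else None."""
    finder = CanonicalFinder()
    finder.feed(html)
    if not finder.canonical:
        return None
    canonical = urljoin(page_url, finder.canonical)  # resolve relative canonicals
    if urlparse(canonical).scheme != urlparse(page_url).scheme:
        return canonical
    return None


page = '<head><link rel="canonical" href="http://example.com/blog/post/"></head>'
print(canonical_scheme_mismatch(page, "https://example.com/blog/post/"))
# http://example.com/blog/post/
```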

An indexed URL blocked in robots.txt

Type: technical SEO

Severity: high/critical

This site wanted to remove a bunch of URLs – mostly PPC campaign URLs – from Google’s index.

These URLs were blocked in robots.txt and had no-index tags.

The robots.txt block prevented Google from crawling the URLs, so it never even saw the no-index tags.

The result: not only did Google keep those URLs in its index, but it also displayed them horribly in the SERPs.
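Google’s documentation states that a `noindex` rule only works if the page is not blocked by robots.txt, because the crawler has to fetch the page to see the directive. The sketch below flags URLs that carry `noindex` but are disallowed in robots.txt. The `/ppc/` rule and the URLs are hypothetical, and the list of noindexed URLs is assumed to come from your own crawl.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt blocking the PPC landing pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /ppc/
"""

def unseen_noindex(robots_txt, noindexed_urls, user_agent="Googlebot"):
    """Return noindexed URLs that robots.txt prevents `user_agent` from fetching,
    i.e. URLs whose noindex directive the crawler can never see."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindexed_urls if not parser.can_fetch(user_agent, url)]


noindexed = [
    "https://example.com/ppc/spring-sale/",
    "https://example.com/ppc/brand-campaign/",
    "https://example.com/thank-you/",
]
print(unseen_noindex(ROBOTS_TXT, noindexed))
# ['https://example.com/ppc/spring-sale/', 'https://example.com/ppc/brand-campaign/']
```

URLs returned by this check need the `Disallow` rule lifted so that Google can recrawl them and see the `noindex`.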

Canonical set to the first page in the pagination series

Type: technical SEO

Severity: high

Paginated pages in a series are not duplicates of one another, so there is no need to canonicalize them to the first page.

The site with this issue was an e-commerce store with a lot of products and extensive paginated product categories.

Unfortunately, all paginated pages were canonicalized to the first page. As a result, Google wasn’t crawling the paginated pages much and a lot of products were not indexed.

A no-index on paginated pages

Type: technical SEO

Severity: high

This SEO mistake was even uglier.

This was a financial blog with a lot of articles and paginated category pages. Unfortunately, only the first page in the pagination was indexable.

The result was easy to predict: many of the articles listed on the paginated pages were not even indexed.

Articles displayed from oldest to newest

Type: technical SEO

Severity: high/critical

This blog had issues with indexation and I wasn’t really surprised.

The blog did not have any category pages. It only had the main blog page with many paginated pages.

The main blog page contained a link to the second paginated page, the second page contained a link to the third page, and so on.

Unfortunately, the freshest content was at the end of the pagination (page 20).

The result? The freshest content was 20+ clicks away from the homepage.
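Click depth is the shortest path from the homepage in the internal-link graph, so it can be computed with a breadth-first search. The sketch below models a simplified, hypothetical version of this blog: the homepage links only to the main blog page, each paginated page links only to the next one, and the newest post is listed on page 20.

```python
from collections import deque

def click_depths(links, start):
    """Breadth-first search over an internal-link graph.

    `links` maps each URL to the URLs it links to; the result maps every
    reachable URL to the minimum number of clicks needed from `start`.
    """
    depths = {start: 0}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        for target in links.get(url, ()):
            if target not in depths:
                depths[target] = depths[url] + 1
                queue.append(target)
    return depths


# Simplified, hypothetical model of the blog: "next page" links only.
links = {"/": ["/blog/"], "/blog/": ["/blog/page/2/"]}
for n in range(2, 20):
    links[f"/blog/page/{n}/"] = [f"/blog/page/{n + 1}/"]
links["/blog/page/20/"] = ["/blog/newest-post/"]

depths = click_depths(links, "/")
print(depths["/blog/newest-post/"])  # 21
```

In the same model, listing the newest post on `/blog/` itself would put it 2 clicks from the homepage.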

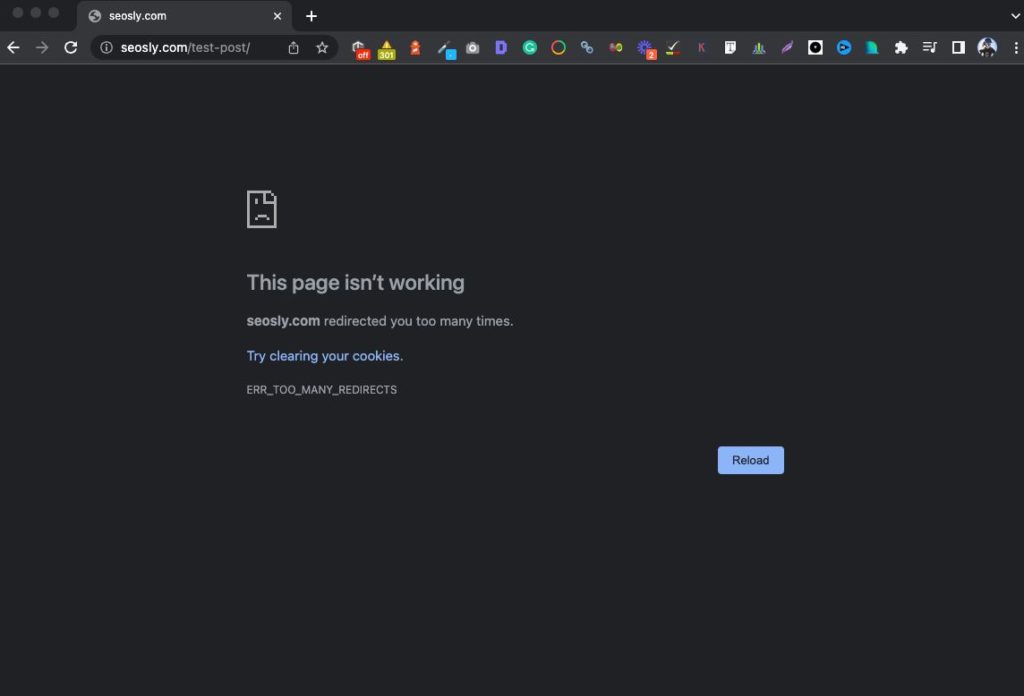

Redirect loops on some blog posts

Type: technical SEO

Severity: critical

On this website, some of the blog posts stopped working and had a redirect loop. My job was to investigate the issue and fix it.

It turned out that this site was using Rank Math and had the automatic redirection option turned on.

The author changed the URLs of some pages (which created redirects to the new URLs) and then changed these new URLs back to the old ones (which created another set of redirects, from the new URLs to the old ones).

The result was a redirect loop and the deindexation of these pages. Fortunately, soon after we fixed them, the pages were indexed again and their rankings were restored.
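A redirect loop is easy to detect once redirects are treated as a simple source-to-target map. The sketch below follows such a map and stops as soon as a URL repeats. The map and slugs are hypothetical (for example, rules exported from a redirection manager); it is not Rank Math’s own logic.

```python
def trace_redirects(redirects, start, max_hops=10):
    """Follow a {source: target} redirect map from `start`.

    Returns (path, is_loop): the URLs visited in order, and whether a URL
    repeated. Follows at most `max_hops` redirects to guard against long chains.
    """
    path = [start]
    while path[-1] in redirects and len(path) <= max_hops:
        target = redirects[path[-1]]
        if target in path:
            return path + [target], True
        path.append(target)
    return path, False


redirects = {
    "/old-slug/": "/new-slug/",   # created by the first URL change
    "/new-slug/": "/old-slug/",   # created by changing the URL back
}
print(trace_redirects(redirects, "/old-slug/"))
# (['/old-slug/', '/new-slug/', '/old-slug/'], True)
```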

Out-of-stock products with a no-index tag

Type: technical SEO

Severity: high

There is a debate in the SEO community about what to do with products that are temporarily out of stock.

I believe that adding a no-index tag to them is not a good option.

This e-commerce site lost a lot of visibility after no-indexing its out-of-stock products. It took the site almost a year to restore the rankings for these products.

Robots.txt returning 5xx

Type: technical SEO

Severity: high/critical

Robots.txt is the first place a crawler visits when crawling a site. If robots.txt returns a 5xx status code, Google will temporarily interpret that as if the site were fully disallowed.

Depending on how severe the issue is, it may lead to serious problems, including Google stopping crawling the site entirely.

I did not have access to GSC, so I could not check this issue in the Crawl Stats report. I uncovered it with the help of Sitebulb.
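How Google treats the robots.txt response depends on its status code. The function below is a simplified summary of the behavior described in Google’s robots.txt documentation, useful for labeling status codes collected during an audit. It leaves out redirect handling and the fallback Google applies after a prolonged outage, and it is not Google’s own code.

```python
def robots_txt_handling(status: int) -> str:
    """Simplified summary of how Google documents handling a robots.txt response."""
    if 200 <= status < 300:
        return "rules are parsed and applied"
    if status == 429 or 500 <= status < 600:
        # Server errors (and 429) are treated as a temporary full disallow.
        return "site treated as fully disallowed (temporarily)"
    if 400 <= status < 500:
        # A missing or inaccessible robots.txt is treated as "no restrictions".
        return "no crawl restrictions"
    return "other (e.g. redirects are followed)"


for status in (200, 404, 503):
    print(status, robots_txt_handling(status))
```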

100 videos on one page

Type: user experience/SEO

Severity: high

This is a very ugly SEO issue for a variety of reasons. This was a website of a dietician who recorded 100 (sic!) videos with different types of FAQs on weight loss, diets, and health in general.

This was super useful and high-quality content. Unfortunately, all these videos were added to one single page. Their thumbnails were very small. No caching or lazy loading was in place so the page took forever to load.

We split this page into 100 separate video pages each focusing on one question (something similar to what I am doing with SEO FAQs). The results were awesome.

ALT text stuffed with multiple keywords

Type: technical SEO

Severity: high

This site really wanted to rank for certain commercial keywords and stuffed them like crazy in the ALT text of images.

In addition, the site used only stock images, so the visibility of those images in Google Image Search was close to zero.

Abuse of anchor text in internal links

Type: technical SEO

Severity: high

Using keywords in the anchor text of your internal links is highly recommended. However, this site definitely went overboard.

This was a site of a law firm that desperately wanted to rank for “lawyer near me”.

In the sidebar, they had a list of different types of lawyers + “near me”. I think the sidebar had about 50 links to different types of “near me” lawyers.

Keywords in URLs in a different language

Type: technical SEO/user experience

Severity: medium

This was a small Polish website that only had one language – Polish. However, all URLs were in English.

The structure and content of URL addresses are not a ranking factor but this was simply misleading to users.

The site had little to no visibility, so we translated the words in URLs into Polish and implemented 301 redirects.

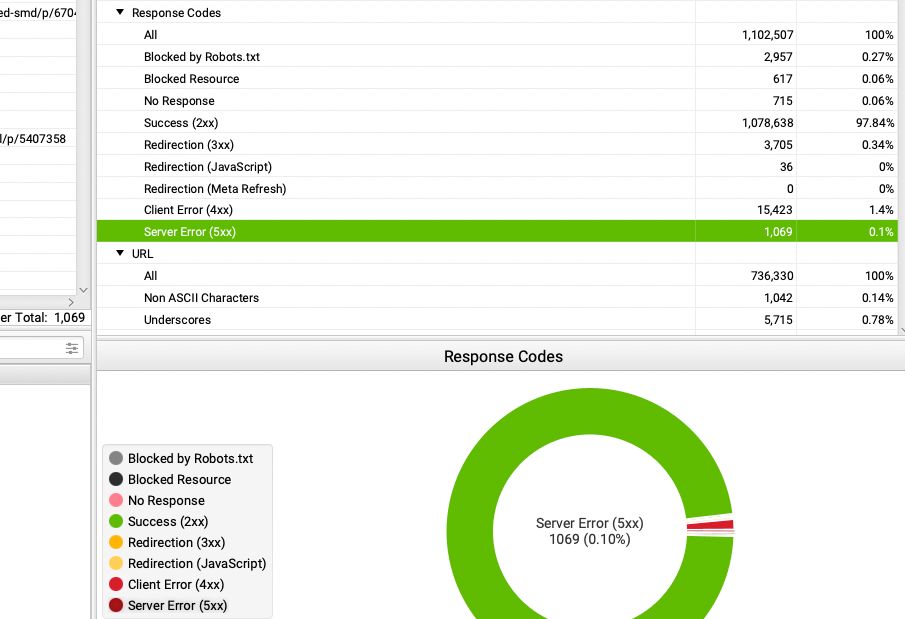

A lot of pages returning status code 500

Type: technical

Severity: critical

This site clearly had serious server issues. It was difficult to browse it without coming across a 5xx page.

I crawled the site and checked the reports in GSC. In my crawl, 50% of pages returned 5xx, and GSC reported a lot of 5xx pages as well.

The site was using very poor shared hosting. We moved it to Cloudways and the issue resolved itself. What a relief.

Three different SEO plugins

Type: technical SEO

Severity: high/critical

SEO plugins such as Rank Math or Yoast SEO can be super helpful for SEO.

This site, however, went overboard. It had 3 different SEO plugins active at the same time, and some of their settings overrode one another or were in conflict.

This was a small site so we managed to clean this up quickly with no casualties.

Five different caching plugins

Type: technical SEO/user experience

Severity: high

This is a very similar story.

I was supposed to audit the site in terms of speed, performance & Core Web Vitals. The owner of the site said they had done everything and the site was still in the red area in PSI and could not pass Core Web Vitals.

It was enough for me to log in to the site and I immediately knew the answer.

The site was using 5 different and often conflicting caching plugins on top of the server caching provided by the hosting company.

I removed all these plugins and installed (and correctly configured) a single caching plugin.

Falsely claiming the articles were written by a famous doctor

Type: E-A-T

Severity: high

This was an affiliate site reviewing different types of supplements, which makes it a YMYL site.

My task was to do an E-A-T audit of the site.

The site suffered after the first Google Product Reviews update and introduced a bunch of quality optimizations.

One of those optimizations was to claim that every article had been medically reviewed by a very well-known doctor. This doctor, of course, had never reviewed the site or agreed to put his name there.

Whether or not Google knew the truth, my recommendation was to remove all these fake proofs of quality.

The site recovered after a ton of work and only after the third iteration of the Product Reviews Update.

Tiny featured images

Type: on-page SEO

Severity: high

This was the blog of a financial company. The site wanted to be displayed in Google Discover.

The site had a lot of E-A-T improvements to make. However, one of the first things I noticed was that blog posts had no images except for tiny featured images (200x150px).

My recommendation (in line with Google Discover documentation) was to make sure that every article had a featured image of at least 1200px in width.

It took many months but the site finally started to get traffic from Google Discover.

Implementing important text as images

Type: SEO

Severity: high

This was a financial blog that had very high-quality content in the form of long guides and how-tos.

Unfortunately, all the bullet points, summaries, excerpts, and listings were implemented as images (with no ALT text).

I believe it prevented the site from realizing its full SEO potential. We changed that and saw very nice improvements in practically all the articles.

Lack of any contextual linking between blog posts

Type: on-page SEO

Severity: high

I see it too often. This was a huge e-commerce website with an extensive blog with various guides, tutorials, and tips.

Blog articles linked to product pages but they never linked to other articles.

This was a huge missed SEO opportunity because these topics were ideal for siloing.

We did add contextual links and created virtual silos. The effects were really nice.

URL parameters not influencing the content displayed

Type: technical SEO

Severity: medium

I see this mistake less and less often but it still happens in the case of older sites.

This was an e-commerce site. You were able to apply different types of filters and sorting.

Some filters did not really change the products displayed, so in some cases, you could have 30 different URLs for the same set of products.

Google in most cases has no issues with this type of “technical duplication”. This did not cause any indexing or crawling problems for this site either.

But to be on the safe side, we added a canonical link element pointing to the URL without parameters.
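Building that canonical URL is a matter of dropping the query string. The sketch below, using Python’s `urllib.parse`, removes all parameters by default and can optionally keep parameters that do change the listed products; the `keep` argument and the sample URLs are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def canonical_url(url, keep=()):
    """Return `url` without its fragment and without query parameters,
    except those named in `keep` (e.g. parameters that change the content)."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key in keep]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


variant = "https://shop.example.com/shoes/?color=any&sort=default"
print(canonical_url(variant))
# https://shop.example.com/shoes/
print(f'<link rel="canonical" href="{canonical_url(variant)}">')
```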

Homepage URLs with redirect chains

Type: technical SEO

Severity: medium

This site had unnecessarily complicated redirect paths for different versions of the homepage. In some cases, there were even 5-6 hops instead of one.

I cleaned up the redirect rules in .htaccess, and that put an end to the issue.

Category pages with no unique text

Type: on-page SEO

Severity: medium

In e-commerce websites especially, it is important to have at least some text on category pages. This site had only an H1 and a listing of products. As a result, its category pages did not rank for anything.

We added relatively short descriptions, some headings, and an image, and in 4-6 weeks categories started to actually rank.

Broken internal and external links

Type: technical SEO

Severity: medium

Every site that is at least a few years old will have some broken internal and external links.

This website, however, had a ton of them in various places, such as lists of resources, citations, or links to other articles on the same site.

This was bad both for users and for Google because some of those 404 pages actually had a lot of high-authority backlinks.

Entire sentences marked as headings

Type: on-page SEO

Severity: medium

This site, or rather its designer, crossed the line.

H2 tags were used as a way to emphasize 1-2 sentences in each paragraph or section of the article. As a result, the articles often had 10-15 super long H2 tags.

We removed H2 tags and used <b> to make the text stand out a bit.

Only H1 tags used as headings

Type: on-page SEO

Severity: medium

It is hard to believe how many sites have headings wrong.

This site only used H1 tags. In some articles, there were as many as 20 H1 tags. We fixed these headings and improved the structure of those articles.

This was more of a best practice thing to do. The rankings did not really change after that.
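Heading problems like this are easiest to see in an outline of the page. The sketch below uses Python’s standard `html.parser` to list headings in document order and count the H1s; the sample article is hypothetical.

```python
from html.parser import HTMLParser

class HeadingOutline(HTMLParser):
    """Collects (tag, text) for every h1-h6 element in document order."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self):
        super().__init__()
        self.outline = []
        self._current = None  # heading tag currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._current, self._text = tag, []

    def handle_data(self, data):
        if self._current:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._current:
            # Collapse whitespace so multi-line headings read as one line.
            self.outline.append((tag, " ".join("".join(self._text).split())))
            self._current = None


def heading_outline(html):
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.outline


article = """
<h1>Budgeting basics</h1><p>...</p>
<h1>Track your spending</h1><p>...</p>
<h1>Build an emergency fund</h1><p>...</p>
"""
outline = heading_outline(article)
print(sum(1 for tag, _ in outline if tag == "h1"))  # 3
```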

Important product pages orphaned

Type: technical SEO

Severity: high

This was a huge e-commerce site selling clothes, with millions of pages. The company hired me to investigate why their products were not getting indexed.

The reason was very simple. Around 100K product pages were orphaned. After improving the internal linking structure of this site, 99% of products were indexed.
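Finding orphaned pages comes down to a set difference: URLs known to exist (for example, from the product database or the XML sitemap) minus URLs that at least one crawled page links to. A minimal sketch with hypothetical URLs:

```python
def find_orphans(known_urls, link_graph):
    """Return known URLs that no page in `link_graph` links to.

    `known_urls`: every URL that should exist (e.g. a product feed export).
    `link_graph`: {page_url: [linked_urls, ...]} built from a site crawl.
    """
    linked = {target for targets in link_graph.values() for target in targets}
    return sorted(set(known_urls) - linked)


products = ["/p/red-dress/", "/p/blue-jeans/", "/p/wool-coat/"]
crawl = {
    "/": ["/women/"],
    "/women/": ["/p/red-dress/", "/p/blue-jeans/"],
}
print(find_orphans(products, crawl))  # ['/p/wool-coat/']
```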

No XML sitemap

Type: technical SEO

Severity: high

This was another huge site selling electronics. The site was using a non-standard CMS.

The site had 500K pages, but about half of them were not indexed. Its internal structure was a bit too complex, and some products were 5-6 clicks away from the homepage.

The issue here was that the site did not have an XML sitemap (check how to find the sitemap of a website). After generating the XML sitemap and submitting it to GSC, most of the products were successfully indexed.
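For a non-standard CMS without a built-in sitemap generator, sitemaps can be produced from a plain list of URLs. The sketch below uses Python’s `xml.etree.ElementTree` to write minimal sitemaps following the sitemaps.org protocol, which limits a single sitemap file to 50,000 URLs (and 50 MB uncompressed); a site with 500K pages therefore needs several files, typically listed in a sitemap index. The URLs are hypothetical, and optional tags such as `<lastmod>` are omitted.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file limit from the sitemaps.org protocol


def build_sitemap(urls):
    """Return one sitemap document (as a string) listing `urls` in <loc> tags."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # ElementTree escapes characters such as & in the text automatically.
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


def build_sitemaps(urls):
    """Split `urls` into sitemap documents that respect the per-file URL limit."""
    return [build_sitemap(urls[i:i + MAX_URLS_PER_SITEMAP])
            for i in range(0, len(urls), MAX_URLS_PER_SITEMAP)]


print(build_sitemap(["https://shop.example.com/p/1/"]))
```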

Important resources blocked in robots.txt

Type: technical SEO

Severity: critical

This was a classic JavaScript SEO mistake.

The robots.txt file was blocking important resources (images, JS, and CSS files) and as a result, Google was not able to render the site correctly and see all of its content.

The issue was quickly resolved after removing the disallow directives from robots.txt.
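To confirm which rendering resources a robots.txt blocks, the asset URLs a page loads can be tested against it. The sketch below uses Python’s `urllib.robotparser`; the `Disallow` rules and asset paths are hypothetical. This parser only does simple prefix matching and does not implement Google’s `*` and `$` wildcards, so rules using wildcards need a different tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking asset folders.
ROBOTS_TXT = """\
User-agent: *
Disallow: /assets/js/
Disallow: /assets/css/
Disallow: /media/
"""

def blocked_resources(robots_txt, resource_urls, user_agent="Googlebot"):
    """Return the resource URLs that `user_agent` is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in resource_urls if not parser.can_fetch(user_agent, url)]


page_resources = [
    "https://example.com/assets/js/app.js",
    "https://example.com/assets/css/main.css",
    "https://example.com/media/hero.jpg",
    "https://example.com/favicon.ico",
]
for url in blocked_resources(ROBOTS_TXT, page_resources):
    print("blocked:", url)
```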

All external links with a no-follow tag

Type: technical SEO

Severity: high

This site was no-following all external links in order to avoid “PageRank leakage”.

Fortunately, I managed to convince the website owner that this is not the best idea in terms of SEO.

A broken XML sitemap URL in robots.txt

Type: technical SEO

Severity: high

This was not a critical issue because the site in question was a small one (100+ pages) but if this had been a huge site with millions of pages, the consequences would have been serious.

I fixed the sitemap link as a best SEO practice. This, of course, did not change anything in terms of SEO because all pages of the site were indexed.
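The `Sitemap:` lines in robots.txt can be extracted with Python’s `urllib.robotparser` (its `site_maps()` method, available since Python 3.8) and compared against the status codes from a crawl. In the sketch below, `status_of` is a hypothetical mapping of URL to HTTP status; any declared sitemap URL that does not return 200 is reported.

```python
from urllib.robotparser import RobotFileParser

def sitemap_urls(robots_txt):
    """Return the URLs declared in Sitemap: lines (an empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


def broken_sitemap_urls(robots_txt, status_of):
    """Return declared sitemap URLs whose crawled status is not 200.
    URLs missing from `status_of` are reported too, since they were not verified."""
    return [url for url in sitemap_urls(robots_txt) if status_of.get(url) != 200]


ROBOTS_TXT = """\
User-agent: *
Disallow:

Sitemap: https://example.com/sitemap_index.xml
"""
status_of = {
    "https://example.com/sitemap_index.xml": 404,  # the URL declared in robots.txt
    "https://example.com/sitemap.xml": 200,        # where the sitemap actually lives
}
print(broken_sitemap_urls(ROBOTS_TXT, status_of))
# ['https://example.com/sitemap_index.xml']
```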

Check: How to access robots.txt in WordPress

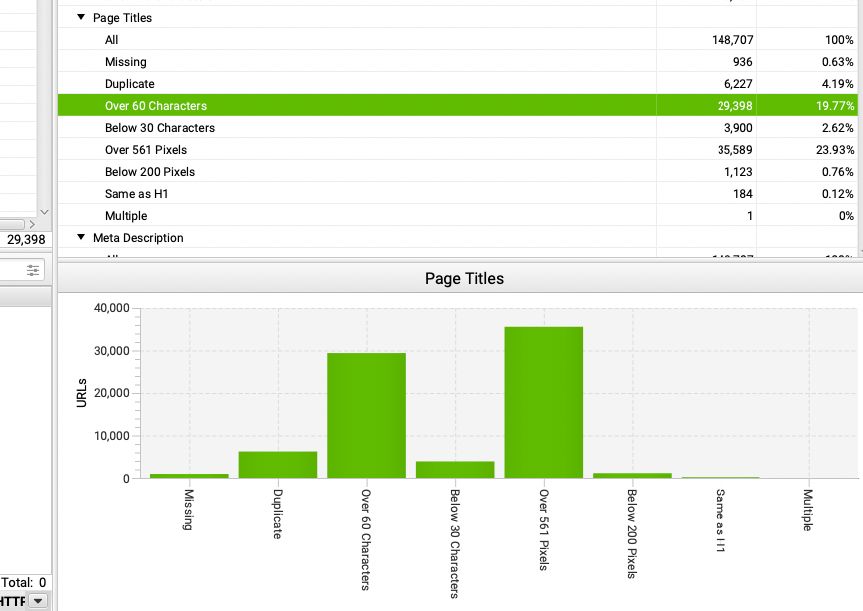

Extremely long title elements

Type: on-page SEO

Severity: high

This site was using extremely long titles which were often longer than 200 characters.

Using such long titles is practically asking Google to rewrite them, and this was exactly the problem the site owner came to me with.

He wanted to prevent Google from overwriting these titles.

We shortened titles big time and Google stopped rewriting them or was rewriting them only slightly.

One page targeting multiple cities

Type: SEO

Severity: high

This was the website of a criminal lawyer who had law offices in 5 different cities and wanted to rank in all of them.

However, there was only one city page targeting all these five cities. The result was easy to predict. The site ranked for none of these cities.

We created 5 different city hubs, each focusing on one city and having multiple subpages relating to this one main city.

After six months, his law firm started to appear for local searches in all these five cities.

Extremely outdated content

Type: content/user experience

Severity: medium

This site displayed both publication dates and last modification dates.

Unfortunately, the freshest piece of content was from 5 years ago and the majority was at least 10 years old.

The site started to simply get old and lose visibility.

We pruned and updated the content. The site started to regain lost traffic and visibility after around 2 months.

Final words on SEO mistakes

This list could be way longer but when I started to hit the 10K-word mark I decided to stop! Now it’s time for you to share your top SEO mistakes.

